74. AI, ethics and the future - DSF talk special edition

Thanks again to DSF for having us all. Find more information about the event here: https://datasciencefestival.com/session/ai-ethics/

You can also watch the recording on their youtube channel here:

As well as our previous panel hosting session here:

- Ethics in Tech: The good, the bad and the ugly https://www.youtube.com/watch?v=COb4P4uL-4A

Transcription:

Transcript created using DeepGram.com

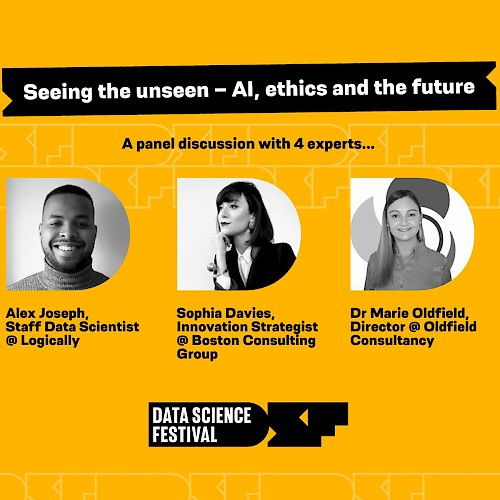

Hello, and welcome to this special edition of the Machine Ethics Podcast. This edition was recorded live on the 23rd February 2023. This recording was part of the Data Science Festival webinar series where I was chairing Seeing the Unseen, AI Ethics, and the Future. I was joined by Sofia Davis, Alice Thwaite, Alex Joseph, and Dr Marie Oldfield. This is an edited version of that talk, which you can find on their website at datasciencefestival.com and on their YouTube.

In this webinar, we talk about what is ethics, designing for responsible AI, ethics as innovation and a competitive advantage, ghost work, who checks the checkers, fairer AI, language as a human computer interface, cleaning up the web, and technologies that shouldn't be deployed, and much, much more. This was a really enjoyable and refreshing session, and there will be an episode with Dr Marie Oldfield very shortly. Some of her comments and lightning talk at the beginning of this session have been edited, so she can talk about her thoughts at length in future episodes. As always, you can go to machine-ethics.net to find more episodes from us. You can contact us at hello@machine-ethics.net.

You can follow us on Twitter and Instagram, machine_ethics or machineethicspodcast. And if you can, you can support us by going to patreon.com/machineethics or leaving a review or thumbs up or 5 stars or whatever it is, wherever you get your podcasts. Thanks very much for listening. And we'll have a normal interview episode coming very soon.

Hi. Hello. Welcome. So thank you for joining us. We're here with the Data Science Festival, AI Ethics and the Future. In my estimation, we're kind of already living in the future.

I don't know how you feel recently, with all the amazing AI developments. So it feels like tangibly things are moving, which is both exciting and also really ethically challenging, which is why we're here today. This is the next kind of session on AI ethics that we've done with the Data Science Festival. There is actually another webinar next week on the 1st March, which is the subtle art of fixing silently failing machine learning models. So do check that out next Wednesday, I believe.

I am Ben Byford. I'm joined by Sofia, Alex, Alice, and Marie. I'm going to introduce them, and they're going to be our panelists and lightning speakers for the day. So we are joined by people who have this wealth of experience, passion and interest in AI, ethics, this kind of social impact, philosophy, mathematics, technology, all these different things kind of boiling in this pot together. So thank you for joining us.

I am going to first give over the mic to our first speaker, who will be Sofia. So Sofia Davis is a theoretical physicist and technologist, and she's also heading up the EcoDigital Decarbonization Solutions with BCG Consulting Group. So if you'd like to kick us off, that'd be awesome. Thank you. Thank you very much, Ben.

Thanks for the warm welcome. It's great to have a growing audience by the looks of it. So it's great to have everyone joining us. Yeah. So as Ben said, I lead climate innovation at BCG within our digital technology branch.

But my current sort of research passion is the topic of deep design. So today, I wanna talk to you a little bit about two sides of the coin, designing for and designing with responsible AI. So I think when we look at where I sit in the world and how I wear many hats, within my role at BCG and more broadly, my background is theoretical physics. I specialized a lot in complexity science. So I'm very driven by looking at things through an emergent kind of lens and understanding the radical interdependency of societies, economies, etc., which I think in the AI context is becoming ever more urgent to advocate for.

And then also I've got experience in design and kind of the digital products and services lens, but moving more towards a kind of transition aperture of design, which I'm gonna talk a bit about today. And then in the context of AI, looking at kind of the future of a collective intelligence for designing with AI. So of the two sides of responsible AI, one is designing for more trustworthy AI. So that's looking at AI for society, addressing sort of data coloniality, and also looking at new research cultures. But then there's the other side, which I want to get into, which is more how do we use AI for systemic change?

And this is kind of the bleeding edge of AI innovation at the moment: better understanding, from a systems lens, distributional impact, not just sort of aggregate impact. And also how do we encode societal values when we look at broader applications of AI that are beyond a more narrow kind of human scope? So if I start with this idea of what we typically talk about human in the loop for, there's a lot of research around this need to expand the aperture to talking about society in the loop. So this is beyond just this human oversight of a very uncontested example of AI. I think a good example, on the left, would be something like an airline pilot, right, overseeing the autopilot of a plane for the general safety of the passengers.

It's not that easy, obviously, as we know, in society. We need much broader ways of encoding societal values and kind of shifting towards often conflicting goals and values within society. So the heterogeneity of stakeholders is ever more complex. So we need to sort of land on an ethics for how we look at AI in more societal and broader contexts, a nice challenge. So some of the things that I've been musing about recently, and I think it's really important when we look at kind of the AI conversation, is that a lot of this is already there before you get to sort of data ethics.

Sorry, AI ethics. We're looking at the origins of sort of data ethics here, and there's been a lot of recent momentum, I think, around this idea of social quantification through data. Right? We're in this interdependent sort of information ecology that's ever growing, ever more pervasive, and we're having these data relations that are creating sort of a new capitalism, a new social order.

And I know my fellow panelists are gonna have really interesting things to say on this from a more ethics domain. But I think things to really reflect on, and we might get to this in the discussion today, is how that's bleeding obviously into things like the stability of democracy. I think this is a very, very tangible one in the data economy. The emergence of sort of false consensus and misinformation is a really interesting one. But what I'm interested in also is how do you kind of compound that, you know, data ethics view with the need for how we design with data?

So as a designer, everything now is about data manipulation, right, and AI is an example of this. And what's really interesting is this switch from sort of the product service paradigm to the vast systemic change that we need when we're looking at the polycrisis, climate change, the deepening sort of economic recession. These are big interconnected challenges, and we need to look at radical change, no longer, unfortunately, evolutionary change. So the design community is talking more about this idea of transition and understanding that through design, we are essentially world building, but within the constructs of sort of the Anthropocene, we're building sort of one world. We're not building multiple futures for different civilizations.

So I think it's interesting to think about the connection here between data and designing this idea of ways of being in the world. So that's something. And something that I've been speculating on generally is the need for more sort of countercultures when we look at this idea of the data economy. Data is the new oil, for example. And there's this idea that if you look at those very capitalistic sort of data relations that are entrenched in the way we think and the way we exist today, how could we look at a more ethical data relation to one another and within society?

So this idea of maybe like an ecological shift that's not so extractive for economic expansion, but potentially for regenerative ecological preservation. And what does an ecology of scale look like to sort of counter this idea of the economies of scale, where you increase volume and you reduce kind of unit cost? How about increasing diversity of representation through data and also looking at new propensity models that actually share the value of what that data has gone on to create back to the people that provided that data? So a recent speculation that I think has a big calling for kind of a new design rhetoric. And decolonial AI obviously is from the origins of this.

So how do we take that data coloniality and then look at sort of the semantic discontinuity that happens when you aggregate that data and when you obviously use it within algorithms for decision making? So there's been some seminal work from a lot of the teams at DeepMind on this, and one particular paper really got my attention, which was looking at the need for kind of new research cultures using decolonial approaches to the development, but also deployment, of AI. And they looked at particular sites of coloniality being kind of within the decision systems themselves, but also largely this idea of ghost work. So when you're kind of doing data labeling and all that kind of preprocessing, that is often done out of context, right, without the domain context for where the algorithm is gonna be distributed. So there were some really clear cut, interesting examples that were starting to sort of codify the size of the challenge in addressing kind of AI in the society context.

So I found that very inspiring. And also over the weekend, I was reading an article by one of the data scientists from the United Nations. You may know him, some of you, Miguel Luengo-Oroz. And he said we forgot to give neural networks the ability to forget, which I find superbly interesting. And any Blade Runner fans might notice this image.

It's kind of a bit of a geeky one, I guess, but Deckard obviously is always contemplating this idea that, you know, Hari's memory is implanted. And I think it's a really interesting point: you know, we're building all of these neural nets and deep learning algorithms, but what about when you want to retract the data that you've given to one of the parameterizations of this model and you don't want it to be considered in sort of the aggregate learned behaviors? So this idea of, like, deep unlearning is something that I think is gonna be getting a lot of attention. And how does this contribute also to things like, I think there's a word going around called forgetful advertising as well, which looks at can you actually retract data you've given to social media that has tailored the advertisements that you're seeing? I mean, a super, like, complex problem is how do you retract data points that a model has been trained on.

So something that got my attention over the weekend that I just found really fascinating. What's also happening is I think from a complexity perspective, the approach we've currently got to AI is very singular. Right? We're looking at it as a sort of superintelligence. It's very monolithic.

Whereas in nature, when we look at intelligence, and I've used the example here of, like, a swarm of birds, like, I think murmurations are quite tangible to everyone, we see this sort of self organization of ecosystems of intelligence, which is not how we're building AI, which is quite unusual. And neuroscience has obviously set the course for a lot of the deep learning research. But I think what's interesting now is a reorientation towards this idea of shared, or whatever you wanna call it, collective intelligence, and whether that potentially has within it some new design patterns for ethics and how we might see the interdependency of different forms of intelligence coming into play. And I think the question that was posed in this recent paper was really interesting. We need to move away from, I guess, looking at how intelligence presents to us as researchers, more to what is it that intelligence must be, given that intelligent systems exist in a universe like ours.

So if I shift gear a little bit, and I'm conscious of the time, so I'll try and speed this up. But the other side of the coin now is, well, what's the huge opportunity that we also gain through the ability to, I guess, disclose hidden dimensionality through AI? And I think the theme of today's panel is seeing the unseen. So I think this is quite a nice way to frame it. But something I've been doing some research on recently is this idea of deep design, and this is really kind of a, I guess, convergence of different approaches.

So coming from sort of a complexity background that we can't just model everything, for example, the economy as being homogeneous. It's made up of many heterogeneous agents of different beliefs, different values, different goals. And typically, our economic models are run on the idea of a representative agent. Right? So we don't actually model for the difference in society.

So complexity gives us an ability to codify a simulation based on heterogeneity and how things are interdependent. And then also deep reinforcement learning is able to give us new mechanisms for looking at how we optimize for multiple objectives. So an example I'll use is how do we look at a fairer tax system where we drive social equality at the same time as maintaining productivity of the state, for example. That's a multi-objective problem, right, because there are two very different, often conflicting goals. And what reinforcement learning can do is help us understand the trade-offs and also simulate those trade-offs in new ways.

And then human centered design in all of this is very important. If we're talking about modeling an economy, we need to understand the behavioral dynamics that underpin it, but also this idea of mechanism design. So if we want to create, for example, a tax policy that is able to better balance productivity and social equality, we need to do something in the model. We need to enforce the right policies that give that outcome. So it's kind of like a reverse game theory, and I think this is where designers really come to the table on these new sort of AI based simulations and understanding what do we actually wanna achieve?

How do we encode societal values in what we're building? So really it's a deep learning approach, this idea of mechanism design, which is very entrenched in modern economics. And kind of coming back to the framing that I mentioned at the start around this idea of a social contract or society in the loop mechanism. What this model actually does is it allows you to actually explicate what those values are through the idea of rewards.

So I'm sure many on the call are aware of, like, reinforcement learning, the fact it's reward based, and that it incentivizes agents to maximize rewards. Imagine making that reward the trade-off of productivity and the equality of social welfare: you might actually get a dynamic that can get somewhat close to an optimal tax policy. And so briefly, kind of under the hood, I don't wanna go too much into the details, but I'm happy to share more offline if you're interested, it's kind of looking at a two-level reinforcement learning problem, and I think for the teams that are doing this, this is really the bleeding edge of simulation for sort of socioeconomic complexity. But you're basically running a simulation of agents for which you kind of codify the goals, the constraints, and the base dynamics, so like the rules of the game. And then you're putting a really intelligent, like, governance algorithm on the top that can go after the sort of social values that we want to achieve within a given context.
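The reward idea described here can be made concrete with a small sketch. Everything in it is an assumption for illustration rather than the method of any team mentioned in the talk: the reward is taken to be equality (one minus a normalised Gini coefficient) multiplied by productivity (total income), agents are assumed to reduce their effort linearly as a flat tax rises, and a plain grid search stands in for the "governance" level instead of reinforcement learning. The names `simulate` and `best_flat_tax` are hypothetical.

```python
from typing import List


def gini(incomes: List[float]) -> float:
    """Gini coefficient: 0 means perfectly equal incomes."""
    n = len(incomes)
    total = sum(incomes)
    if n == 0 or total == 0:
        return 0.0
    ordered = sorted(incomes)
    weighted = sum((i + 1) * x for i, x in enumerate(ordered))
    return (2 * weighted) / (n * total) - (n + 1) / n


def equality(incomes: List[float]) -> float:
    """1 minus the Gini coefficient, rescaled so the range is 0..1."""
    n = len(incomes)
    if n < 2:
        return 1.0
    return 1.0 - gini(incomes) * n / (n - 1)


def social_welfare(incomes: List[float]) -> float:
    """Assumed reward: equality multiplied by productivity."""
    return equality(incomes) * sum(incomes)


def simulate(skills: List[float], rate: float) -> List[float]:
    """Toy inner level: effort falls linearly with the tax rate,
    and all tax revenue is redistributed in equal shares."""
    pre_tax = [s * (1.0 - rate) for s in skills]
    share = rate * sum(pre_tax) / len(pre_tax)
    return [p * (1.0 - rate) + share for p in pre_tax]


def best_flat_tax(skills: List[float]) -> float:
    """Toy outer level: grid search over flat tax rates (not RL)."""
    rates = [i / 20 for i in range(21)]
    return max(rates, key=lambda r: social_welfare(simulate(skills, r)))


if __name__ == "__main__":
    skills = [1.0, 2.0, 3.0, 10.0]
    rate = best_flat_tax(skills)
    print(rate, social_welfare(simulate(skills, rate)))
```

Even in this toy setting, neither a zero tax nor a full tax maximises the reward, which is the kind of trade-off the speaker describes the planner searching over.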

So it's kind of a new way of engineering these problems. Big shout out to the teams that are doing this. I think there's a lot of research communities spread across Harvard, Oxford, Salesforce, and many others at the moment. But there was a really interesting one that got in the press quite massively, and rightly so, the AI Economist, which was solving this problem of balancing productivity and social welfare. And this was run in the Americas, and they found that the model actually produced a more optimal trade-off, at 16% more than, like, the US-based tax model.

Obviously, the challenge now is kind of putting that into the real world, which I think is the call to action for designers as well, to understand how do we, you know, safeguard the translation of these models into real, complex settings. Another research endeavor that I'm involved in at the moment is applying the same mechanism to global climate cooperation. So looking at agents as regions of the world and understanding how do we define better negotiation protocols between countries that give a net positive gain in terms of reduction in temperature and carbon emissions whilst not limiting the GDP of the country. So they're looking at continuing economic growth, but balancing it with meeting the climate transition. And this is a very open community endeavor.

There's lots of climate policy makers, anthropologists involved in this. And I think that for me is really where I see this kind of arc of ethicists coming to the table, data scientists, but also domain experts, and many other kind of alternative research cultures, I guess. So I will stop there. I'd be very happy to share more on some of the work that I mentioned as well. I've also started collating some of this in a GitHub.

So if you're interested in some of the simulation models in the community that are doing these cool things, please check it out. But otherwise, over and back to you, Ben. There's so much in there. So thank you, Sofia. Some of the things that struck me were this new social order, ghost work and labeling context, unlearning, natural intelligence and that comparison, what values we're encoding, as well as, like, economic growth and baking these assumptions into the kinds of algorithms we're making.

Hopefully, we'll get some questions. So please use the Q&A from the audience and really ask those really important questions. We've got a few coming in right now. So thank you very much. Our next speaker is Alex Joseph, a data scientist at Locally, an expert in kind of fake news, hate speech, all these amazing things that are happening right now that we should be aware of.

So thank you, Alex. Take it away. Thanks, Ben. And thank you, Sofia. That was super interesting.

Before I get into my sort of quick talk, I'm super interested in this transition from a dominated society to a more ecological society, implementing some complex systems views into the way we build our society. So, yeah, I would really be interested in discussing that at some point. But, yeah. So a bit about me. So I've been working in the online harms space for a few years now.

My job has basically been to apply AI to clean up the online environment in various ways. I started my journey working in the misinformation space, looking to combat misinformation in the UK, Argentina, and various locations in Africa. Misinformation is a term that I think most people will be familiar with, especially since the COVID pandemic. But I think people are often surprised at just how much damage it can do. I think one particularly graphic example comes to us from India, Delhi to be exact, where during the pandemic, there were a number of rumors, which were proven to be false, that the local Muslim population was spreading coronavirus.

And as a result of that, it led to a number of violent attacks where, in the subsequent violence, someone actually lost their life. So misinformation is obviously something we want to crack down on. That was just one example. There are many others. The good news is that there are ways to combat misinformation.

Fact checking has been proven to be one of the best methods against it. Fact checks have been proven to reduce belief in misinformation, and those who read fact checks have been shown to retain factual information weeks after this. And this was proven across different political environments, cultures, and countries. So fact checking is really a tried and tested method. The problem is each fact check can take days to research and then publish.

So the challenge really is to speed up fact checking to meet the demands of the social media age, where misinformation is spreading that much faster. I have been building tools to sort of facilitate that in a number of ways. Firstly, I've helped build tools to help fact checkers spot misinformation faster, allowing them to get ahead of the curve, so to speak. Secondly, I've helped build tools to spot repetitions of claims that have already been fact checked, allowing a fact checking team to get the most juice out of any one of the fact checks. And then lastly, I've helped build fully automated fact checking systems capable of fact checking macroeconomic claims without any human inputs.
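Spotting repetitions of an already fact-checked claim can be thought of as a text-matching problem. The sketch below is only an illustration under stated assumptions, not the tooling described by the speaker: it uses word-overlap (Jaccard) similarity from the standard library, a hypothetical threshold of 0.5, and made-up example claims. Production systems would more plausibly rely on learned sentence representations.

```python
import re
from typing import List, Optional, Set, Tuple


def tokens(text: str) -> Set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: str, b: str) -> float:
    """Share of distinct words that the two texts have in common."""
    ta, tb = tokens(a), tokens(b)
    if not ta or not tb:
        return 0.0
    return len(ta & tb) / len(ta | tb)


def best_match(
    claim: str, fact_checked: List[str], threshold: float = 0.5
) -> Optional[Tuple[str, float]]:
    """Return the most similar fact-checked claim and its score,
    or None if nothing reaches the (assumed) threshold."""
    if not fact_checked:
        return None
    scored = [(fc, jaccard(claim, fc)) for fc in fact_checked]
    top = max(scored, key=lambda pair: pair[1])
    return top if top[1] >= threshold else None


if __name__ == "__main__":
    # Invented example claims, for illustration only.
    checked = [
        "Unemployment has fallen to its lowest level since 1975",
        "Inflation doubled last year",
    ]
    print(best_match("unemployment has fallen to the lowest level since 1975!", checked))
    print(best_match("The weather is nice today", checked))
```

A match lets an existing fact check be reused for the new post, which is the "most juice out of any one of the fact checks" idea in the talk.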

Again, a massive time saver. But moving away from misinformation for a second, while still staying within the sort of online harms space, more recently I've been focused on the hate speech problem. So, as we all know, social media makes it easy for all of us to express our opinions, our thoughts, instantly online. But the problem is when disagreement arises, it can often result in hate speech being directed at individuals or groups. In extreme circumstances, hate speech online can manifest offline, resulting in people being harmed or killed.

In a recent study, it was shown that hateful rhetoric online can make political violence more likely, give violence direction, and increase fear in vulnerable communities. In another study, coming out of America, it was shown that hate speech against Muslims led to a 32% increase in real world hate crimes. So, again, like misinformation, hate speech is something that we really want to crack down on, and we are building hate speech detection algorithms to detect things like racism, sexism, religious hate in online content, and I've been sort of learning just how tough a problem it is. Hate speech, and misinformation for that matter, is often spread by malicious users. These could be trolls or state backed accounts, and these malicious users are intentionally spreading this content.

And in their sort of aim to spread malicious content, they will do anything they can to evade detection by the algorithms that could be deployed to detect them. They might do things like replace some of the letters in an offensive term with numbers, or perhaps they might write their post in one language, let's say English, and then use offensive terms from another, let's say French. So, recently, one of the things I've been thinking about is what AI visibility means in the online harms context, and the ways in which we might approach making these algorithms more transparent, more visible, while still preserving the mystery needed to not give the whole game away and not to sort of empower any malicious user that may be trying to avoid and beat these algorithms. So, yeah, thank you. I think that's all for me.
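The letter-for-number trick mentioned above can be illustrated with a minimal sketch. It is an assumption-laden toy, not the detection approach the speaker's team uses: the substitution table is hypothetical and incomplete, the blocklist contains harmless placeholder words standing in for offensive terms in two languages, and matching is simple whole-word lookup.

```python
import re
from typing import List, Set

# Hypothetical, incomplete table of common character substitutions.
LEET_MAP = str.maketrans(
    {"0": "o", "1": "i", "3": "e", "4": "a", "5": "s", "7": "t", "@": "a", "$": "s"}
)


def normalise(text: str) -> str:
    """Lowercase the text and undo the substitutions in LEET_MAP."""
    return text.lower().translate(LEET_MAP)


def flag_terms(text: str, blocklist: Set[str]) -> List[str]:
    """Return blocklisted words found after normalisation.
    The blocklist can mix terms from several languages."""
    words = re.findall(r"[a-z]+", normalise(text))
    return [w for w in words if w in blocklist]


if __name__ == "__main__":
    # Placeholder terms standing in for an English and a French slur.
    blocklist = {"badword", "grosmot"}
    print(flag_terms("You are such a b4dw0rd and a gr0$m0t", blocklist))
```

Rewriting digits as letters also distorts genuine numbers in a post, so a real system would need a more careful approach; the sketch also shows why publishing exact rules would make them easy to evade, which is the transparency tension raised in the talk.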

Thanks, Ben. Thank you, Alex, so much. Yeah. I mean, I feel like the hate speech transferring to the real world, the fact checking, there's some, like, light and darkness in there. Right?

So I think hopefully we'll balance that out a little bit later as well and get to the methods and things that people are doing. It was really super interesting. So I'm just going to quickly share the poll results from the first poll that we had, which was the interest in AI ethics. We've got lots of people who are using AI ethics in their data science work, which is awesome. So it's really nice to see that.

Some people who are on the advisory side, or consulting, and other people who just have a general interest. No one ticked the ethics washing one, so thank you everyone. You're not trolling us today, so that's really nice. And we'll put up the 2nd poll in a second as well for you to do that. So our next speaker is Alice Thwaite, and she is an amazing person, a founder and philosopher at Hattusia, if I'm pronouncing that correctly, and senior lead for ethics at OMG and Omnigov.

Take it away, Alice. Hi, everyone. Hi. It's fantastic to be here. It's also really good to see the results of that poll.

When I started in this field, there were very few people on the advisory side of AI ethics, or as a consultant. So it's very cool to know that there's at least 15 people in this room who are doing this job. And for that reason as well, I think I can hand on heart say that the caliber of conversation that we're having now around ethics is so much better than it was 2 or 3 years ago. I think when we were in these conversations 2 or 3 years ago, at least when I was there, it was mainly just talking about the fact that, you know, there's two sides to ethics, and it's very complicated, and maybe we should be thinking about it and that kind of thing. And now to see some amazing research coming through and companies taking this super seriously and actually saying, yes, it is complicated, but so are so many parts of work.

Right? So are so many parts of labor. An artist, for instance, doesn't just stare at a blank canvas and hypothesize all the different ways that they could go. They just, you know, they start working and they create something. And that's what we're doing now with ethics: yes, there's a lot of different opportunities out there that are available, but there are frameworks to get started, and we are doing better now, I think.

At least in this community, I think sometimes when you look at the news, you can feel a bit disheartened. But if you are, I don't know, take comfort from this community. Take comfort from the fact that this is something which is turning into a profession, and it is an established field. So to those people who are perhaps using data, using it for their work, or perhaps looking at this from a general interest point of view, I just wanted to say a couple of words about what the profession is so that you can think about, you know, whether or not you either want to become an ethicist or the sorts of questions that you should be asking managers or hiring personnel so that you can start thinking about bringing these people onto your team.

Because, ultimately, if you are a data scientist, I'm not saying you shouldn't be thinking about these questions, but you've already got a considerable amount of expertise. Right? And to ask you to take on another, you know, degree's worth. I mean, there's people who've spent PhDs, 9, 10, 12, 15 years, just looking at very specific questions within ethics. I think it's kind of unrealistic to expect you to take on all of that extra work, and instead you should be working alongside people who have these kinds of specialisms.

So, I think Sofia already touched on this, really, and Alex gave a flavor of it as well in his presentation. If you are working in the field of ethics, you might have many different specialties and methodologies. So, for example, I tend to use the philosophical method in my work. I also have a degree in social sciences, so I'm kind of aware of the social science methods in order to understand information, to make sure that policies are as inclusive as possible, to make sure that we're including as wide a range of perspectives on ethics as possible. But there's also historians.

I've spoken with people who specialize in English literature and so are looking at literary perspectives on ethics, musicians, artists. There's this phrase called digital anthropologists as well, which I think is really taking off, digital social scientists. All of these people are people who can help you in your work to understand how can we push this towards something which is working for society as opposed to something which has a very narrow objective, which might be around an individual company's profit. And so that is the first thing to say: there is going to be so much diversity here because, you know, the range of diversity that exists within social science and humanities is also quite extraordinary. So that's the first thing to say.

And the second thing to say is that, when we're working in businesses, you might already have a degree in one of those subjects. Right? And you're like, oh my gosh. I've already, you know, got an anthropology degree or something, or I've already got a degree in international relations. So how do I then transition into something which makes this applicable for the workspace?

That's when you start looking at all sorts of different methods as well in terms of business transformation, because, essentially, what we're asking is for businesses to transform in a way that they develop AI into something that does prioritize these other metrics as opposed to just prioritizing maybe that profit margin before or something which was a bit more narrow. So you've got to think about business transformation. I use quite a lot of psychological methods in my work as well. I'm not, well, actually, that's probably another group of people who would be really good to work alongside as an ethicist, or in ethics.

But psychology has such a massive part to play in trying to understand business transformation. And you also need to have a degree of sales skills. You need to have an amazing, like, you have to have empathy with everyone. You have to be able to listen. You need to understand, and you do need to sell in and up the value of the work that you're doing.

So that's something else to consider when you're thinking about ethics; it's not just the case at the moment. I mean, I've said we're, you know, established, but we're definitely not developed. We do still need to push the value of what we're doing the whole time back to the businesses and the organizations that we're working with. So that's just a bit of a flavor of the sorts of things that you might be thinking about when you're thinking about this idea around ethics, and I'm looking forward to hearing what everyone else has to say. Thank you, Alice, for that super succinct and awesome talk.

Getting the buy-in, almost, into AI ethics, how AI ethics, tech ethics and data ethics have progressed over the last few years, and the kinds of people and methods that we can all take advantage of, hopefully making this situation better for society and not just for capitalism. We have another poll which I'm going to share with you now, which is to what extent we are using ethics today, using ethical frameworks or processes. Some people are using those. We have many more people talking about ethics internally, which in my book is awesome. Like Alice is saying, you know, we've come quite far, and people and businesses are taking notice of this now.

So that's really, really good. Not yet started slightly, you know, on the edge there. We could do something about that. Don't know. Not thinking about it.

It was interesting. I want to hear from you about why maybe you are at the starting block. What kinds of things are you worried about, or do you just not know where to start maybe? So that'd be really interesting, to get some more questions from you as well. I'm just gonna spend a quick few minutes to discuss a few things that I've got kind of rattling around in my brain, and then we're gonna open it up.

So please do put more questions on the Q&A. I'm pointing that way because that's where I can see them. That would be really awesome. So, I'm Ben. I've been running the Machine Ethics podcast for 7 years, and I've been really fortunate to talk to some people who may be on the panel.

Lots of amazing people who are either really interested in this area, doing things, developing things, or have written books and such, in AI, AI ethics, and AI's impact on society. And some of the things that really captivated me are obviously the kind of here and now of the situation, working with these models, trying to extract the best outcomes when we're working on these data science pipelines, which I'm sure a lot of you will be familiar with, kind of the terminology, taking some idea through to production and creating a new AI service. But there's all these other kind of fringe things like machine ethics, like how do you program ethics into a system, and what does that actually mean. There's obviously kind of transhumanist ideas around, like, singularity and kind of how this technology is going to change us as humans, as well as just ideas around machine consciousness and indeed human consciousness, as well as more abstract things like simulation theory and evolutionary AI and all these, like, fun things around the edges. One of those fun things at the moment, which is just exploding all over the place in my kind of ears and my Twitter feeds and all sorts of things, and I'm sure yours too, is this idea around generative AI, image generation, text generation.

There's lots of these models out, and it's kind of typified by ChatGPT, for example. And I'm trying to roll along kind of a presentation about some of these ideas and what it means for AI ethics and kind of the interesting positives as well as negatives around some of the developments at the moment. And you might have seen things around plagiarism, kind of ripping off someone's style, direct reproduction, so just taking someone's work, whether it be text or images, and just kind of reproducing it. It's very hard to fight against that sort of thing when we ask for specific outcomes. We ask for the Mona Lisa, for example, and it's going to try its best to give us something which is representative of the data.

And it's these sorts of things that you might have heard of. But then there's also these other things like the filtering problem. How do you filter bad language, maybe nudity, maybe harmful content when you're presenting it as a service to the general public? You can go and chat to ChatGPT. You can go and use Midjourney and DALL-E 2 and all these sorts of things right now.

And it's interesting to me that, like in some of the other lightning talks, a panelist pointed out that we have fact checkers and we have these systems in place that are filtering out information from these systems. And it's interesting that, you know, they have the authority at the moment to take certain things out and not others. So it's checking the checkers, as one of the questions on the chat points out. It's also really interesting to kind of appreciate how we're moving forward with these technologies. How is it going to affect us in the long term?

You know, what do these systems actually mean for how we're going to live our lives in the future? And one of the things I've been kind of playing with at the moment is that we have these interactions where we type into a computer or we move a mouse around. Or maybe scroll. Maybe just scrolling. Maybe most of our interactions are scrolling these days.

Maybe I'm wrong. It's just scrolling. And we've got this new interface, right? We thought we had it with Siri and all these other kind of chat interfaces. But they were taking our information and doing some searches in the back and cobbling together some answers.

With this, we're taking our information and we're putting it into some latent space and transforming that into, you know, what is the next thing that we wanna hear. With the things that we see in image generation, say Midjourney, which is the one I've used most extensively, you're taking that kind of text information, or it could be vocal information, and that's getting transformed into, again, this latent space and getting pumped into an image generation. I'm waving hands around. Sorry.

But the image generation is part of this larger network. And it's just really interesting to me what those other things are gonna be. So you could just take that image part out and replace it with something more like some sort of computer-based diagnosis system. So you could ask your large computer network, how are you today? And it would be able to kind of put that into latent space, pick up all the logs that it has, all the things it's been tracking, and give you a natural language output.

And that is a way that we could interact with computers. But it might not be just computers. It might be robots, and it might be robots that are in our house. And it might be robots that are able to interact with us with language and tell us about themselves. And these are the sort of things which will be happening, and I think it's very interesting.

And I'm yet to kind of find the edges of the situation. But I just thought I'd put some of these things I've been thinking about out there. But do check out more of that sort of thinking on the podcast, and in all these different places where you find more information, especially around AI ethics. So with all that said, thank you very much to all the speakers for presenting such a great wealth of different types of areas and information. If I could call you all up, we can start with a starter for 10 question, if that's okay.

So if you could put your cameras on. Thank you. Hi, everyone. Hello. Yeah.

Hi. So I've got one which is very close to my heart, and I think I'll probably start with you, Alice, and then we can move on to anyone else who wants to pitch in, which is around this idea of buy-in for AI ethics. If you're working in a company, maybe you're not doing anything in this area at the moment, but you would like to have some buy-in in the company. Where's the place to start, and what kinds of things might you be doing as a stepping stone, processes and methods, some things that you might want to look at? Yeah.

So I think the first thing to say, and it's been in the Q&A a bit, and I think Marie kind of touched on this as well, is the idea that ethics shouldn't be a replacement for regulation. You know? And at the moment, I think that a lot of companies are equating ethics with compliance in some way. And, actually, when you speak to people about, you know, what is AI ethics, they'll come back to you with a lot of things that are actually in the GDPR.

They'll say, like, transparency, accountability, and all of these things. And I think the key thing to say here, and this is what I said, you know, is you might be coming from academic institutions where ethics means something very different to you. Like, I fundamentally specialize in ethics for the for-profit, not-for-profit and charitable sectors, so non-academic sectors.

And what I try and say is ethics should be kind of your competitive advantage. So you're not trying to replace regulation. In fact, there should be better regulation. Let's be super clear on that, and that should go through the democratic process. We're in a democratic country.

We don't want businesses to suddenly replace that. They'd be like, oh, no, we don't need democracy because, actually, we've got these ethicists in the room. That's not the case.

It's like ethics is using different methods from, you know, the humanities and the social sciences to create competitive advantage, because that should be where your products are differentiating. You know? Like, I don't know if anyone's come across this idea of closed world discourse. But at the moment, the way that we see AI is just so narrow. You know, we've got such narrow ideas of what technology could look like.

And if you look at closed world discourse, it really has an amazing theory as to why that is the case. And, actually, what ethics should be doing is expanding out all of the possible scenarios, all the possible things that we're doing. And how can that not be competitive advantage? And aren't we living in a hypercompetitive world at the moment? This is when I get into my sales mode. Right?

The cost of living crisis. We need innovation. We need to kind of, like, have policies which mean that you stand out compared to your competitors. So where do you get buy-in for ethics? You know, I love the innovation angle.

I love it because most tech companies think they're highly innovative, but I just see the same replication of the same tools again and again and again. The second one, and I think it was in the Q&A, is managing the risk a little bit. Like, ultimately, the big fines from the ICO are about to start taking off. We've started to see the first class action policies where people are not complying with GDPR, so you do need to manage your risk. And the third one is around building trust.

So how are you going to build trust with your stakeholders? That's a less tangible bucket. I think lots of people talk about trust but don't have a theory of trust. Another one of my little gripes. But those are my three things around getting buy-in.

Also, just momentum. You know? Like, it's just a classic thing. Like, if you are interested in ethics, find those 5 other people in your department who also care about ethics. Start a lunchtime book club or something.

You meet up every month, and then that momentum translates into change. And I've seen this, you know, calling out a great company that's done this, Sky. Sky created the digital ethics network. They're called The Den. And then suddenly, you know, 18 months later, they started hiring their first digital ethicists.

Like, this really does work. So the buy-in is crucial, and everyone in this room has a part to play. And it is super empowering. It's really exciting, and these are the positive news stories that we need. Did anyone else have any kind of sidestep opinions on that question?

Thanks, Alice. Yeah. I think just reflecting off the back of that from my lens of working a lot in kind of enterprise tech, right, and big tech, there's sort of this, I guess, top down approach at the moment. I think you were scratching at this, Alice, as well, like, obviously, the elective sort of AI ethics officers at Big Tech. Right?

I mean, this is something we're seeing commonly, and the implementation of a code of AI ethics at companies. But I think what's absent is this bottom up, what Alice is talking about, right, this emergence from embedded teams around this topic. I see it personally myself as it needs to sort of come from where kind of human centered design has already got its foot in the door with enterprise innovation. I think there's a bigger call to action now in sort of embedding this broader approach. And also I can't escape what Alice said as well about the narrowness of our conception of AI in general.

And I think this is where we fall over more often than not: we have a very sort of, I think I mentioned it, like a monolithic view of, like, superintelligence, replicating human intelligence, making it very domain specific. Whereas I think we need to move into something that's a bit more like a constellation, right? And I think that needs to happen bottom up with ethics embedded like a governance from within, not just this sort of top down, you know, board level ethics message that we're commonly seeing. So that's just my 10¢ on how I'm seeing it within sort of the tech and product side of things. Sorry.

Thanks. Can I just come in because, there was something you wanted to say, Ben? And I can't believe I haven't done this before as a philosopher because we always like defining things really up front as well, and I really like that Marie gave her definition of ethics. And my definition of ethics, it's not just mine, it comes from, obviously, a lineage of many different philosophers, is that ethics is the study of how to live.

So it's the study of how people should interact with each other and how people should interact with the environment. And the reason why it differs slightly from what Marie said is because it's not just about not causing harm. It's about creating structures that actually then enable people to thrive and enable us to thrive in environments. And, also, it kind of sticks to the idea that in western ethics, because I'm not an expert in Shinto ethics or Ubuntu ethics, like, there's so many different ethical frameworks, it can be deontological, which is action based. It can be consequentialist or utilitarian, which is consequence based.

It can be embedded in virtue ethics theory as well. So I can't believe that I didn't do that at the beginning. But I think also us acknowledging that, like, when we talk about ethics, we might all have very different ideas of what ethics is, and then where we move forward. So that was just a little thing I wanted to say because without it, I'd kind of have to go and, like, rescind my profession, basically. Yeah.

Yeah. I think we're all beating around the bush about the fact that there is ethics in this way of thinking about this world we're living in. Right? How do we actually implement stuff which has good outcomes because we're thinking ethically? We're building this future in a certain direction.

We could be building in a different direction. Right? And it could be bad, but we're just using this ethical thinking to steer it over this way some more. One of the ways I like to think of it is, you know, we have all these options. You know?

How can we leverage the best situation that we possibly can? Hopefully, not just for, like, profit, but for social good, cohesion, wellness, flourishing, all these interesting words. I want to come back to one of the things that you said actually, Marie, if that's okay, which was around accreditation. I'm really interested in it. You know, it's been brought up before and you mentioned it, and I haven't heard it being used for a little while. So I just wondered if you have a strong opinion about accreditation.

There was also this idea about fact checking the fact checkers. That was one of the questions in the chat. And this idea of legislation coming in as well. I don't know if you have an opinion about legislating data ethics or data science. Yeah.

Yeah. Happy to jump in. So, I think the fact checking question, first of all: do you fact check the fact checkers? I think with any sort of fact checking organization that's worth its salt, you would expect them to explain the reasoning behind the fact check within the fact check itself. So I think the answer to that is we all, as a society, fact check the fact checkers.

Because if a fact checking organization makes a mistake, they should have processes in place for a fact check to be challenged, and so that is one mechanism. I think there are also, like, accreditations. So there's, like, the International Fact-Checking Network, which is almost like a governing body for fact checking organizations that maintains the sort of basic standards of fact checking, which is important. I think going back to the hate speech question.

So I think there's a question about whether we want to change people's sort of worldview or stop them from harming others. And I do appreciate this is, like, a very difficult topic, and I think it does come down to your work. You said there's a right answer. But I think, for me personally, I don't think anyone should be subjected to hate speech. I'd say that in quotation marks because there's no single definition of hate speech.

But I think there are broad overlaps between different definitions. So I think we do have enough there to sort of say this piece of content is likely to be perceived as hate speech by 90% of the UK population, for example. And I think, yeah, we want to clean up so-called online discourse and remove cases where anyone is on the receiving end of such comments. I think that's one of those ones that could keep running, and we should, like, have another session on just that aspect of, like, cleaning up the web or cleaning up Internet discourse. That's really interesting.

That's a title, isn't it, for a session? So yeah. Awesome. Did anyone have anything to add to that? I've got a few more questions in my bag here.

I'm seeing blank faces, so I'm gonna keep going. So there's so much we could talk about. So I'm trying to get a good breadth of opinions. One of the things that we had at the beginning of this was the idea that people are using this technology now. Right?

And a lot of the people who will be in the audience will be using or thinking about or tinkering with algorithms, AI models, etcetera. And the question of how visible or how transparent you have to be is quite a pressing one for people using this technology. Then if people want to take that, what does that really mean for a business or a public sector organization at the moment? I mean, I can have a go at that. Yeah.

Yeah. Yeah. That'd be great. And I imagine Sofia might have a few things to say about that as well. You need to be completely transparent.

There is no situation in my experience, or that I've come across in academia or with complex models, where you should be saying it's a black box and you don't know how it works, and you're not sure what's happening. Because if that's the case, then how are you putting that into society? That's dangerous. And I've seen models where people think the outcome is correct when actually the model's been doing something completely incorrect. And when it's been looked into, it's not worked correctly at all in the way that people perceived.

So it was technically doing the right thing, but for the wrong reasons, and that's not what we want because that can cause issues. So you need to be following best practice processes and making sure that what you're developing is fit for purpose and is not gonna impact in a negative way on anybody at the end of it. And that means that you have to be 100% fully transparent with the entire process of build, testing, and implementation. Yeah. I guess just following on from that, I mean, everything Marie said resonates, right?

I think it's paramount in the development process, but also the deployment process as well. And I think this is where a lot of people become unstuck, because they think that explainability is where ethics ends. And it's actually having those sort of, like, feedback loops that come back in, particularly around, like, I'm gonna say ChatGPT, right, in the tuning. But having that sort of mechanism that comes back in, that's not yet been articulated very well. So there's sort of a, I don't wanna call it a lip service to transparency, but that's my sense of it.

It's very much about the development process, but it's not about deployment and kind of iterating on the model. So I think being able to mobilize this idea of a participatory process, where you're actually involving the citizenry in the public services that affect them, right, and recalibrating the models, is something we don't have a mechanism for at the moment. So it's more the operations of how do you do that well? How do you do that at scale? How do you do that across multiple domains?

And I think we haven't quite scratched the surface yet of what that means, like transparency with action. Right? So that's something I'm getting a sense of that we need to certainly evolve. I'd love to follow up on that actually because I think you both made some really good points about the fact that transparency is kind of an instrumental good. Like, it's instrumental for, sorry.

That's my next meeting coming up. It's instrumental for something else. And that instrumentalization could be accountability or it could be trust. And for each of these things, like, transparency doesn't offer the full picture for you to get there. And there is, like, a real focus on transparency.

And I think that, Alex, you know, quite a lot of what you're doing is around that transparency. Right? It's making sure that people have enough information. Everything you just said about the fact checkers and the fact checkers that are worth their salt are engaging in transparent practices. But, you know, you can't say the buck stops there.

You need to think of it as instrumental for something else, as opposed to what we call an intrinsic good. So an intrinsic good is good in and of itself. And for that, you might say freedom or justice or peace or love or something, whereas transparency is instrumental. That's all I'll say in this brief time. So we're gonna get to peace and love AIs pretty soon.

That's what I'm aiming for. That's where we're going. Thanks, Alice. So I think I might come back to you if that's okay. There's this idea that we've been playing around with in the questions as well, and we've been talking about ethics, and we've been deciding that it has this business application and that we should be thinking about our social impacts and outcomes and all these sorts of things.

And we have some tools that we can use to do that. But there's this question around higher level thinking. You know, are there certain areas where we should actually not use this technology at all because it is too detrimental? How will we know, essentially, if that is the case? You know, who is in charge of making these decisions?

And it appears that, you know, businesses are in charge of making this decision at the moment. So I find that whole kind of existential idea of where we're headed, and in whose hands we're headed there, quite interesting. How much time have we got? Just a small one. Indeed.

Indeed. Yeah. Okay. I think there are a couple of technologies which shouldn't really be developed. I think the one that's pretty much unanimous, it's unanimous.

Unanimity? I don't know. The one that's unanimous in kind of, like, the field is facial recognition, in the fact that that is a technology that should not really be deployed at all. It should not be used at all. And with that, then kind of emotional recognition and these kind of technologies as well.

Whenever you say that, you always have someone come up with a really niche example, super niche. Like, facial recognition is actually really good at identifying early stroke patients. You know what I mean? You're just like, okay. But that's then contained in such a small sphere.

I mean, you know, that's fine to keep that as an exception, but I think facial recognition generally shouldn't be in operation at all. And I know that there's some other foundational research areas where a lot of academics will be like, oh, you know, it's just foundational research. Like, we don't know what the applications are gonna be yet. Blah blah blah. And you're like, I think we're pretty sure what the applications are gonna be.

And, actually, the funding of these things should be kind of considered because it's not just business. It is also academics, who admittedly are then funded by private interests and stuff. But this is basically a very complicated space. So, hopefully, I've done justice to that question in 90 seconds. But, yeah, I think that facial recognition is a prime example of the sorts of technology that should just definitely not be used.

Yeah. I think just following on from that, I remember there being a Kaggle competition. Right? And it really struck me about this Kaggle competition that the challenge was to take a face and recognize similarities to a subsequent face, to whoever they were related. Right?

And on the face of it, this was quite an interesting challenge. But then you look at the organization running it and the people who are financing that organization and the kind of lack of, let's say, details around where the data is coming from and what they're going to be subsequently using the research for. And you get a bit unstuck with, you know, actually, this is probably a very dubious thing that we've got up here. And can we challenge whether this should exist at all? And I think it's sort of striking and awful that things can pop up in all sorts of places like that.

And I think you can have your own ideas around it. But like you say, I think there are some things which are almost too detrimental. Or you might find, you know, if you're gonna go utilitarian, the weight of the bad outcomes outweighs the good in some of these instances. So that's definitely something that I've experienced anyway. Well, we're coming up to just about 9 minutes left.

There's been loads of activity actually from the panelists here on the questions and answers. So if you have asked a question, you might have some answers, or if you haven't been looking at all, check it out, because there are some really great thoughts from our panelists on there, which may not transition onto the video. That's what I'm afraid of. We're coming up to a final question. But before that, I was wondering if we could quickly explore the unlearning because I just thought it was really interesting.

Do you have any thoughts about how one unlearns things? I assume that's coming to me for my, yeah, the Miguel comment. So I find this really interesting, and it's not something I think has been given a lot of airtime. It's this idea of deep unlearning.

I mean, I'm coining this term now, right, because that's how I'm thinking about it. But it is an interesting fact because I think it comes back to this idea around, like, the semantic discontinuity with data. Right? And rights over data when it's aggregated, which is something that is not currently regulated, you know, when data is transformed and applied to infer certain, you know, decision rights, etcetera. Like, what are the data owner's rights within that?

So there's already that kind of core layer that hasn't been addressed. Then add to it the complexity of when your data, whether it's a photo of your face, your voice, whatever, has been used to train some sort of, you know, neural net that learns on a pool of, you know, a plethora, like in the millions, of data points. And you might say, well, okay, removing, you know, my face from that learned information, that neural net, has no implication on the output, which is probably true depending on the size of the dataset. But how do you actually unpick and regress that? I mean, I have not seen any research around this, and I find this fascinating.

And I think it speaks also to the traceability of, you know, deep learning in itself and how this works. But I think it poses the broader, more accessible question of aggregation logic, and how things are transfigured in the services that we're using, and what it means for the origins of where this information is coming from, and potential misinformation as well. So it really caught my attention because those are the sort of conversations I don't see happening enough. And I think we need that discourse around understanding as well, and how embedded and entrenched some of these data sources are in the amazing, but very, very, yeah, intimidating technologies we're fast seeing evolve. It's like we need an actor-critic method for our own sort of societal understanding of these things, which we don't have.

And maybe this is where the ethicists will sort of drive this new paradigm shift. So, yeah, I find it a really interesting speculation and thought experiment, but I'm interested to get these guys' thoughts on it. If I'm going to give my 2¢, I think it goes into that plagiarism thing I was referring to earlier on as well. So if you were able to ask the model how much did my images, or how much did my data, come into the output of this specific thing, then you could maybe monetize your data in that way in these models. Or you could at least know, or, like, there's things that you could do there where you could somehow unpick that data, train it against that data so it's negatively correlated with that helper.

So there are things that you could do, but I don't have a good view onto the kind of materials, the research that's happening, because these models are vast. Right? So I think the amount of training and interesting work there is gonna take a little while to trickle through to actually see some outcomes. If anyone in our audience knows any amazing research in this space, please drop the papers in the chat because I'd be fascinated. And I love how you phrased it, like noise canceling of neural nets, like find the antiphase and then negate it.

There's something in that. I think it's super interesting. Anyway, I'll be quiet now on unlearning. So I'm worried about the time. These conversations can last for hours, and I wish they would.

So thank you to all our panelists. If you would leave us all with something: you know, nearly half of the people in the audience have said in the poll that they are working in this area. They're working in data science and they may or may not use ethical frameworks or methods, or they're at least interested in this area because they're online and watching us right now. You know, what can you leave those people with? If you're tempted to do something, one thing, right now, what would that one thing be, you know, whether it's looking at something, listening to something, going and doing something? What kinds of things would you advise?

Okay. So I've kind of got two sides to this. I think maybe I'm a bit biased against my own kind of positioning as well, but put yourself in a team of multidisciplinarians, anti disciplinarians, right? I think me just being on this panel has been so insightful to be around such a diversity of voices. And I think within the engineering community and the digital community, we don't have enough kind of heterogeneity on the team.

And that was kind of what I was speaking to in my lightning talk. Right? It's kind of these new research cultures. They urgently need to happen. So find people that cover all of these different dimensionalities, right, and start conversations with them.

And then secondly, I would try and plug a little bit the example I gave around this AI research project for global climate cooperation. Take an interest in it, take a peek at it. I showed my GitHub link, which has some of the models that are gonna drive that. It's super interesting. I think it's where we can see research communities across anthropology, across ethicists, across data scientists, across designers coming together to take on really big policy and economic challenges.

So I would encourage you just to have a peek at what's going on there. There's some really interesting people involved. Yeah. And attend more of these discussions. I found this super insightful.

Thanks, Ben. It's not very often I get to have a really good discussion with people on this topic, so I've really enjoyed today. I like listening to the new kind of things that have been developed in the area as well. So I'm gonna go and check out a few things that Sophie has been talking about. And I think I could probably talk to Alice in a pub over about a million drinks about philosophy, and we could argue all day long.

And I've really learned from Alex as well about what fact checking is, you know, what's going on and how it should be. I can't remember exactly what Alex said, but it was that when you're fact checking, you need to know what you're talking about before you can fact check it. And that really struck a chord because I thought that's really what needs to happen. So thanks for that. Cool.

Yeah. So just in response to your question, Ben, I think one thing that I want people to take away from this is the concept of red teaming, and it's a concept from cybersecurity. It's basically an exercise where you put on the cap of someone that could potentially use your algorithms for malicious purposes. I think it's a really useful exercise, especially in applications like hate speech detection, where you're thinking about making these algorithms more visible perhaps. But you do need to think about how that can be misused and stuff like that.

But red teaming has applications pretty much anywhere. So, yeah, that's what I want people to take away. Yeah. Oh gosh. It's happened again.

What would I say is the best takeaway? Like, generally, we talk about these jobs. They don't actually really exist at the moment. Like, have a Google search open for ethics jobs. You will see them.

They're generally quite mid-management or senior. If you are currently working in a company, make that job happen for yourself. You know, put that into your objectives. Attend these events, pick up some books. My favorite book that I'm recommending all the time at the moment is Stephanie Hare's Technology Is Not Neutral.

It's an excellent introduction. I'm sure that everyone has some really good books. I love Tarleton Gillespie's content moderation book, Custodians of the Internet, for instance. For environmentalism and AI, you've got Kate Crawford's Atlas of AI.

Like, there's so many resources available. So you just, you know, put it into your own objectives and own that domain. And I'm telling you, that's gonna be really hard. So I've just told you something that's really gonna take you, like, a year to 18 months to achieve. And the other thing I'm gonna tell you is when you get there, make sure you are paid the same amount as a data scientist.

Otherwise, you will not be taken as seriously. You heard me list off all the types of things that we need to be able to do. That job description should be paid the same amount as data scientists. So value yourself, do the best you can, celebrate the small wins, and make the job happen. And, yeah, I'm sure everyone on this panel would love to hear from you because we love supporting people who are trying to get into it.

Thank you so much. That is the end. We're gonna drop our mics shortly. Thank you very much to our panelists. Again, we could just keep going, and we're just gonna go to the pub now.

Right? So we'll see you all there. And hopefully, we'll see you at the next one of these. The next one will be next week, but also the next ethics one, which we'll have, I imagine, in the next 6 months or so. Thanks, everyone. Thanks to the Data Science Festival for putting us on.

And if you wanna access the video from this session, it'll be distributed to you and available on the website. Again, thanks, everyone, and we'll see you again in the future.